In this post, I present my impressions of this book I recently read.

Why Am I Reading It?

To start, I think it’s important to give some context, since nothing can be interpreted in absolute terms. In the preface, the authors state that the book targets a wide audience, from academics to practitioners and from undergraduates to graduate students, all of whom are trying to learn how to conduct cybersecurity experiments more scientifically. This was not exactly my case. Not because I already knew how to do it perfectly (I did not), but because I’m nearing the end of my Ph.D., so I have already fallen into most of the pitfalls presented in the book during my research journey. Therefore, my reading of the book was more retrospective, and I expect the gains to show more in my future decisions than in my current ones.

That said, I should mention that my interest in this book came from my recent interest in the “Science of Security” question. I have faced some scientific “dilemmas” in recent papers (many still under review) that made me question the nature of scientific pursuit in cybersecurity, and a book on the subject sounded like a perfect match for my current interests.

Finally, I should mention that earlier this year I published a paper (link here) that touches on many research challenges also discussed in the book. I don’t like to promote my work when talking about someone else’s work, as it sounds like I am praising myself rather than the author I am discussing, but I think the fairest thing to do is to tell the reader that I had my own paper in mind when reading the book because… that’s what I had in mind when reading the book! My paper is definitely less formal than the book, but it is enough to show that I was not completely new to the field, so keep that in mind when reading my comments.

Below, I discuss my impressions chapter by chapter. They are based on notes I took while reading the book, so some aspects come up in multiple chapters. They are not a summary of the chapters; instead, they pinpoint some issues that caught my attention.

Chapter 1

The book starts by addressing the “Science of Security” question right away. It mentions the NSF initiative toward the scientific development of the security field and the JASON report. I think it is a pity that these initiatives and reports are not better known. Before this book, I had only heard about them in a few papers, all in the science of security context. It seems they didn’t reach a broader audience, which might explain why the situation seems to be the same today as when they were published.

The book then compares cybersecurity with other sciences. It states, for instance, that security science deals with systems created by humans and is therefore not easier than the life sciences. I agree, and I would go even further: it might be methodologically harder than the life and natural sciences because in security there are no (hypothesized) “fixed laws” that drive attacker behavior. Instead, attackers are free to do whatever they want to conduct an attack. In this sense, computer security seems to me methodologically closer to psychology and other sciences involving human behavior than to the natural sciences.

The book reaches a similar conclusion in the next paragraphs when it says: “computer viruses, unlike life science ones, can read published papers to defeat defenses”. I laughed a lot. I’m just not sure whether attackers really read academic papers, although it is theoretically possible.

Another aspect discussed in this initial chapter is the availability of data. The book claims that there is more data than we can analyze, so we have to adopt a smart approach. I partially agree. On the one hand, when there is data, there is a lot of it, which is why many big data approaches have been proposed. On the other hand, we do not always have data, and the book left me with a slight impression that it assumes we do. In my experience, I have worked on more cases with data collection limitations than with full data available. As I discussed in my paper, there are many difficulties in data collection and experiment reproduction, for instance, the use of private datasets tied to companies and shared only under specific NDAs.

Chapter 2

In this chapter, the discussion about data is refined. It states, for instance, that “the problem with generated data is you don’t know the quality of data”, and I agree. In my paper, I also complained about the external validity of some works because their datasets lacked representativeness.

The chapter develops into a summary of “data collection” challenges and pitfalls. At many points, I was reminded of my own experiences, for instance, when reading about decisions taken as truths, like assuming something is malicious because it is on a blacklist even when nobody can explain why it is there. I feel that we security researchers do that all the time.

Another statement that caught my attention was “fuzzing is the art”. I see the beauty in seeing things as art, but the goal of the science of security seems to be to make things less of an art and more of a science. I’m aware that many authors see security as an art; for instance, Matt Bishop does so in his book “Computer Security: Art and Science” (link here), but I tend to disagree. How much heresy is that?

The part of this chapter that made me especially happy was the discussion of signatures. It showed me that the book is grounded in real life, not just in models, because signatures are still widely used in practice but often neglected by the academic papers I usually read.

The chapter ends with a discussion of human factors, an area that has been growing significantly. I think this is very important. In the future, this and later chapters will have to be expanded with deeper ethical considerations, especially given recent cases of “controversial” studies (I will not name them, but everyone knows which ones).

Chapter 3

This is the chapter that most directly discusses the “scientific” side of the science of security, and it was probably the one that interested me most at the time. It is suggestively named “In search of truth” and discusses exactly that: what truth is from a scientific perspective. The answer is that it depends on context; it is not the same “truth” as in a logical proposition.

This need for context is then reinforced by questioning ground truths. I fully agree with this, especially because I wrote an entire paper about Brazilian malware (link here) just to argue for the importance of context.

The book then discusses a key question: what is security? This is key because if we cannot precisely define it, we can’t measure it, and if we can’t agree on a definition, we can’t compare scenarios. So, what is the answer? There is no security! At least not as a natural property. The authors understand security as just the policies we define, so it is an agreement between humans. I find this definition quite acceptable, but then I ask myself: how can humans agree on the policies? The book does not have a definitive answer, and neither do I.

The remainder of this chapter is, as the section name suggests, “A primer on science and philosophy”. It discusses what truth is, reductionism, derivationism, and so on. As with the reports mentioned above, I only recently came across these (and other related) ideas, and it was good to see them in a book. I just wonder why they are not more popular. My views of my own practices changed after learning about epistemological positions; I think I became a little more “constructivist”. However, “empiricists” will say that the outcome of a theory does not depend on the practices used to produce it, which is also true to a large extent. I think I will have to review more books in future blog posts to give a more complete opinion. In any case, I tend to agree with Herley and van Oorschot (link here) that these ideas should be taught and discussed in schools.

Chapter 4

This chapter covers “Desired Experiment Properties”, which is a good topic. Reading it reminded me of my first years as a researcher, since designing really good experiments has always been hard for me. I appreciated that the book mentions the work by Rossow et al. (link here), since it was one of the works that guided me in designing good malware experiments, introducing key concepts such as the need for a monitor more privileged than the monitored object. I believe more works like this would benefit our community, especially new students in the field.

The chapter has a long section on consistency, especially internal consistency (variable isolation and so on), but one can also infer external consistency (extrapolation to other scenarios) from it. As a malware researcher, the point that caught my attention most was “Consistency across time”. Everyone knows that distinct malware samples lead to distinct results, which is a problem. In my paper, I discussed this issue as part of an attempt to make malware research more reproducible, for instance, by sharing execution traces so that the same results can be reproduced. The book’s proposal is a bit different: results are consistent if they lead to the same conclusion. I think this is acceptable, but it would be great to have more examples (from the book or from other research works) of the extent to which this holds. Can we make a consistent observation about all aspects of a malware dataset or not?

One aspect that might impede consistency is that AV labels change from one AV to another and also over time. A set of samples labeled as trojans today might not be classified as such on another day. This can only be solved with what the authors call “Consistency of tools”. As defined, “two tools must give the same result for the same dataset”. In my opinion, this is never evaluated in security papers, at least not in the ones I have read. I discussed this in my paper and pointed exactly to the AV case. In this section, the authors also discuss Dijkstra’s analogy that computer science is no more about computers than astronomy is about telescopes. I fully agree with the authors, who somewhat disagree with Dijkstra (at least in this context). Science is also very much about tools, which is why I proposed in my paper that security mixes science and engineering, especially in the sense that engineered solutions must be evaluated using scientific practices. In a way, I was describing the consistency-of-tools criterion without the proper formalization presented in the book.
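To make the “Consistency of tools” criterion a bit more concrete, here is a minimal sketch of one way to quantify it: the fraction of shared samples on which two tools assign the same label. The engine names, sample IDs, and labels are hypothetical, and raw agreement is only one simple choice; it does not correct for chance agreement, which measures such as Cohen’s kappa do.

```python
# Hypothetical labels from two AV engines for the same five samples.
# Engine names, sample IDs, and labels are illustrative, not real scan results.
engine_a = {"s1": "trojan", "s2": "worm", "s3": "trojan", "s4": "adware", "s5": "benign"}
engine_b = {"s1": "trojan", "s2": "trojan", "s3": "trojan", "s4": "pup", "s5": "benign"}


def agreement_rate(labels_a: dict[str, str], labels_b: dict[str, str]) -> float:
    """Fraction of samples labeled by both tools on which the labels match."""
    shared = labels_a.keys() & labels_b.keys()
    if not shared:
        raise ValueError("the two tools share no labeled samples")
    matches = sum(1 for sample in shared if labels_a[sample] == labels_b[sample])
    return matches / len(shared)


print(f"agreement: {agreement_rate(engine_a, engine_b):.2f}")  # agreement: 0.60
```

The same function can compare one tool against itself at two points in time, which covers the drift of AV labels over time mentioned above.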

To close the consistency section, the authors discuss what they call “Consistency of the designer model”. In short, biases are everywhere! I loved it, since part of my paper discusses exactly that: biases.

The next section in this chapter discusses generalization. The authors start by arguing that even Randomized Controlled Trials (RCTs) have problems. I feel that the book implicitly assumes that RCTs are standard in the field. My experience (anecdotal, obviously) is that RCTs are barely known in the field, at least formally. Most evaluations I see (including mine) are ad-hoc experiments. Anyway, this subject is revisited in later chapters. The authors proceed to discuss the difficulty of claiming that a dataset is representative when trying to generalize. My experience makes me agree with them: despite all my attempts to claim that my dataset of Brazilian malware was representative, the claim was hardly ever accepted.

The final section discusses transparency, or, in other words, “do not omit information”. It covers many important aspects, such as outlier removal, funding disclosure, and ethics. What I missed in this chapter was a discussion of vulnerability disclosure. As I anticipated when commenting on Chapter 2, I believe ethical considerations will dominate future editions of this book.

Chapter 5

This chapter covers “Exploratory Data Analysis”; in other words, it is a statistics class. In my opinion, it is the most theory-dense chapter. It covers all the basics of statistics, such as types of graphs, distributions, and so on. In my view, however, this chapter suffers from the same problem as other materials about statistics: the lack of examples. To be fair to the book, many applied examples are presented in the case studies in the following chapters. More specifically, what I missed here was not examples of distributions or more graphs, but more examples of how to apply them, that is, how to model a system using statistics. While reading this chapter, I remembered my graph theory classes: I learned all about the algorithms to traverse graphs, but I knew little to nothing about how to model a problem using graphs…

Chapter 6

This chapter discusses “Sampling” and thus can be seen as a continuation of the previous one. However, it focuses less on mathematical aspects and more on qualitative ones. An interesting discussion concerns estimating the population of malware in the world. We only have access to the number of detected malware samples and not to the real total of malware, which includes unknown samples, in a clear case of survivorship bias.

The book tries to address the problem by using as a reference the 300K new malware samples that an AV vendor detects daily. I question even this number, since we know little about the vendor’s collection procedures, how many samples are repeated across vendors, whether the vendors count variants, whether re-emerging samples are counted, and so on.

The discussion resumes when the authors address an appropriate dataset size. In my paper, I suggested that an anchoring bias occurs when defining dataset sizes, but I had no idea how to overcome it. In this book, the authors suggest that statistical significance might be used for this. I agree that it seems to work for surveys, but I couldn’t see it being used for other malware experiments (e.g., malware detection). I still feel that we would need some base-rate data, such as the number of malware samples per user machine, to design a representative experiment.
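For surveys, one common way to turn statistical significance into a dataset size is Cochran’s formula for estimating a proportion, n = z²·p·(1−p)/e². The sketch below is my own illustration, not necessarily the method used in the book, and it assumes simple random sampling from a large population. Note that it needs a prior guess p of the proportion being estimated, which is exactly the kind of base-rate information I miss for malware experiments; p = 0.5 is the conservative fallback when nothing is known.

```python
import math


def survey_sample_size(margin: float, z: float = 1.96, p: float = 0.5) -> int:
    """Cochran's sample size for estimating a proportion within +/- margin.

    z = 1.96 corresponds to roughly 95% confidence. p is a prior guess of the
    proportion being estimated; 0.5 maximizes p * (1 - p), so it is the most
    conservative choice when no base rate is known.
    Assumes simple random sampling from a large population.
    """
    n = (z ** 2) * p * (1 - p) / margin ** 2
    return math.ceil(n)  # round up: a fractional respondent is not possible


print(survey_sample_size(0.05))  # 385
print(survey_sample_size(0.03))  # 1068
```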

Chapter 7

This chapter, named “Designing structured observations”, basically covers experiment design. Here the authors resume the discussion about RCTs. They claim that RCTs are the most common type of study, which goes against my experience (at least formally). I grepped the 400+ papers I used in my SoK paper, and fewer than 1% of them mentioned RCTs. I know it is weak evidence, but I would really like to understand what happened. Either the papers are not using RCTs, or they are using them implicitly (which is more likely). In the latter case, shouldn’t they at least use the proper nomenclature? It’s rare to see a paper mention a “control group” instead of a “baseline” or “ground truth”.
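A search like the one described above can be sketched as follows. This is a hypothetical reconstruction, not the original search: it assumes the papers were converted to plain-text files in a single directory, and the regular expressions are illustrative. Counting the looser terms alongside explicit RCT mentions helps separate papers that do not run RCTs from papers that run them under another name.

```python
import re
from pathlib import Path

# Illustrative patterns (assumptions): explicit RCT vocabulary vs. looser terms.
PATTERNS = {
    "rct": re.compile(r"\bRCTs?\b|\brandomi[sz]ed controlled trials?\b", re.IGNORECASE),
    "control group": re.compile(r"\bcontrol groups?\b", re.IGNORECASE),
    "baseline": re.compile(r"\bbaselines?\b", re.IGNORECASE),
}


def term_coverage(paper_dir: str) -> dict[str, float]:
    """Fraction of .txt files in paper_dir that mention each pattern at least once."""
    papers = sorted(Path(paper_dir).glob("*.txt"))
    if not papers:
        raise ValueError(f"no .txt files found in {paper_dir}")
    hits = dict.fromkeys(PATTERNS, 0)
    for paper in papers:
        text = paper.read_text(encoding="utf-8", errors="ignore")
        for name, pattern in PATTERNS.items():
            if pattern.search(text):
                hits[name] += 1
    return {name: count / len(papers) for name, count in hits.items()}
```

Calling `term_coverage("papers_txt")`, where `papers_txt` is a hypothetical directory, returns the share of papers mentioning each term.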

RCT discussion aside, this chapter resonated with me. I’d like to point the statement “no system is completely new” out to some people I call the “novelty reviewers” 🙂 In general, this chapter agrees with what I proposed in my SoK, both regarding “observational studies are pre-requirements for intervenience” and the advice for students of “practicing all types of study”, which is what I tried to do during my Ph.D. (I’m not sure whether I succeeded).

Overall, the book seems very up to date with the latest trends, as can be seen in discussions such as the GDPR and WHOIS controversy, in which data about registered domains is no longer available, driving some of my malware analyst friends crazy.

To finish the chapter, the book compares RCTs with the industry practice of A/B testing. This is the first time I noticed the book using industry as an explicit example. Although the authors state that the book targets both academics and practitioners, I must admit that it has been much more academic so far.

Chapter 8

In this “Data Analysis” chapter, the authors present many pitfalls and anecdotes, as well as the base-rate fallacy. I will skip a detailed analysis of this chapter because I covered most of these aspects in my SoK paper and I don’t want to turn this blog post into an ego trip.
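For readers unfamiliar with the base-rate fallacy, here is a minimal worked example of my own via Bayes’ theorem. The rates are illustrative assumptions, not figures from the book: with only 1 malicious sample per 1,000, even a detector that flags 99% of malware and only 1% of benign samples produces alerts that are malicious only about 9% of the time.

```python
def alert_precision(tpr: float, fpr: float, base_rate: float) -> float:
    """P(malicious | alert) from Bayes' theorem.

    tpr: detection rate, P(alert | malicious)
    fpr: false-positive rate, P(alert | benign)
    base_rate: prior fraction of malicious samples, P(malicious)
    """
    true_alerts = tpr * base_rate
    false_alerts = fpr * (1 - base_rate)
    return true_alerts / (true_alerts + false_alerts)


# Illustrative numbers only: 99% detection, 1% false positives,
# and 1 malicious sample per 1,000.
print(f"{alert_precision(tpr=0.99, fpr=0.01, base_rate=0.001):.3f}")  # 0.090
```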

Chapter 9

This chapter is the first of several in which the authors present case studies on different security subjects. It starts with a “DNS Study”. I won’t discuss the details of each case study; instead, I prefer to highlight some scientific questions that re-emerge in them. For instance, the authors claim that it is impossible to make a list of malicious domains because we don’t know what is malicious. In my opinion, learning the limits of something is the best contribution science can make to the understanding of a subject. Continuing the discussion, the authors raise the question of whether a domain that was malicious in the past can become benign after moving to another owner. It certainly can, which leads to the conclusion that maliciousness, as represented this way, is a temporal characteristic.

Chapter 10

The case study of Chapter 10 is “Network Traffic”. As in the DNS case, the chapter discusses how hard it is to differentiate benign from malicious traffic. The book presents the WannaCry malware as an example, in which the sample was identified by its resolution of the “kill switch” domain. It is hard to assign maliciousness to this behavior, since resolving a domain is not a malicious action by itself.

I liked the chapter, but, overall, I think it focuses too much on botnets and does not cover other aspects of network traffic that could also be related to botnets/malware, such as downloaders, exfiltrators, and so on.

Chapter 11

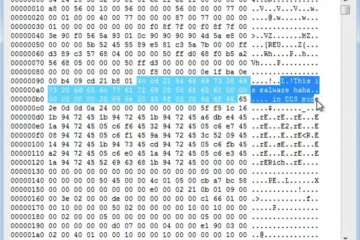

This is the chapter about “Malware”. As a malware researcher, it was a pleasure to see malware discussed. I’m trying not to be pedantic in evaluating this chapter, since it is only one chapter and not an entire book about malware.

The authors define malware in terms of behaviors. I like this; it is how my advisor argued in his thesis (link here) that malware should be defined, and it is likely the best way we can define malware right now. Still, this definition has many implications that I hope to discuss in future posts. Moving on, the book states that we do not have a clear definition of what malware is; I not only fully agree but also argue that this is the reason for multiple controversies in the field. The book points out that, in general, it is external parties who label, and thus define, what malware is. This is true, so I ask myself whether it is even possible for us researchers to agree on what we are detecting with our tools. The problem of external definitions is also present when we consider AVs. I agree with the book when it says that they are “black box tools”, and I argue that we need to open them up to gain a better understanding of malware detection.

The authors also state that malware changes over the years. I agree, and I think Fabricio, our specialist in concept drift (link here), will agree even more. As with malicious domains, I believe that malicious behavior in software might be temporal. There might even be ambiguous cases, which the book calls “Good Bad Behavior”. I liked reading about it because it reminded me of my advisor calling these cases “benign malware samples”.

Chapter 12

This is the final chapter of the book, and it covers human aspects. This is the aspect of security I’m least familiar with, and I believe the same is true for most people. It would deserve an entire book. The part I enjoyed most in this chapter was the suggestion of a way to conduct research: “develop research as software, dividing it into small parts”. I would complement it with writing tests as well. Knowing in advance what you will measure is significantly different from discovering it “on the fly”. The discussion about how to develop research is so good that I believe it should be extended to other parts of the book. Overall, I think we must reflect more on the research we develop. It is interesting to read that, for the authors, good scientific questions are narrow ones, which are simpler but more direct. I have to admit that, in the sense presented in the book, I ask very bad questions. I tend to prefer broad, open questions over narrow ones; after all, I’m developing a thesis on “how to detect malware”, which is far from simple and direct.

The book introduces some studies correlating security with personality traits. I think it is a very interesting subject and one of those I knew little about until recently, when I saw the first works of my co-author Daniela (link here). The book poses a challenging question, almost a moral dilemma: would it be OK to profile employees’ personality traits to increase security? I think someone could do an entire Ph.D. on it.

Overall

My verdict (so pretentious!) is that this book is worth reading, to the point that I thought it was worth writing this blog post. I’m just unsure whether this book will get practitioners’ attention. Not because it is not interesting, but because many of my friends might still find it too dense in theoretical discussions. In any case, this book is a good introduction to many aspects of security, which will certainly help newcomers. Still, I think many of the philosophical questions are better appreciated if you have some previous background in them. This was my case, which might be why I appreciated the book.

More about

Thank you if you made it this far. If you still want to know more, my Ph.D. defense is approaching, and I plan to discuss all the aspects mentioned here in a bit more depth there. I’ll let you all know.